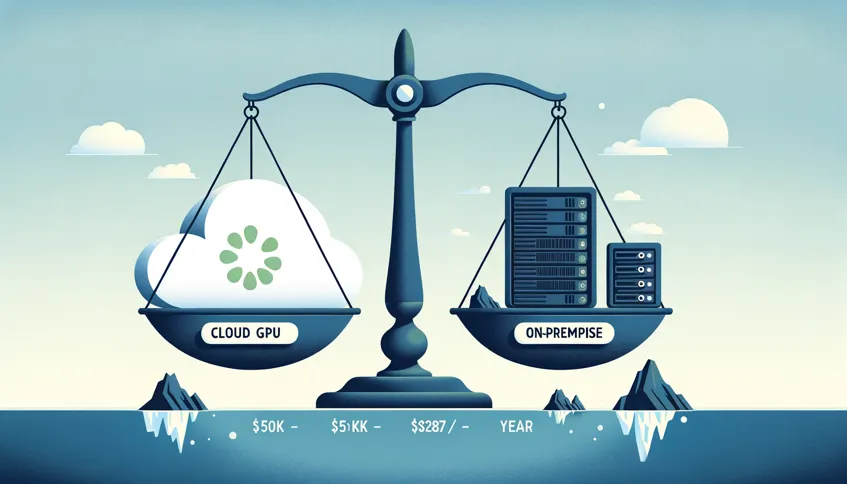

Self-Hosted LLM Cost vs. Performance

Running private LLMs on cloud GPU instances (AWS A10G/A100) is reported to cost $50k-$287k/year more than using commercial APIs. On-premise builds or co-location offer substantial savings. Performance gaps persist: even expensive self-hosted models lag behind top-tier APIs such as Gemini 2.5 Pro.
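The premium is the difference between an always-on instance bill and a usage-based API bill. A minimal sketch of that arithmetic follows; the hourly rate, token volume, and API price are hypothetical placeholders chosen for illustration, not figures from the reports summarized here.

```python
HOURS_PER_YEAR = 24 * 365  # always-on instance, no reserved or spot discounts assumed


def annual_instance_cost(hourly_rate_usd: float, instances: int = 1) -> float:
    """Yearly cost of running GPU instances around the clock."""
    return hourly_rate_usd * HOURS_PER_YEAR * instances


def annual_api_cost(tokens_per_year: float, usd_per_million_tokens: float) -> float:
    """Yearly cost of serving the same token volume through a metered API."""
    return tokens_per_year / 1_000_000 * usd_per_million_tokens


def annual_premium(hourly_rate_usd: float, tokens_per_year: float,
                   usd_per_million_tokens: float, instances: int = 1) -> float:
    """Positive result: self-hosting costs more than the API for this workload."""
    return (annual_instance_cost(hourly_rate_usd, instances)
            - annual_api_cost(tokens_per_year, usd_per_million_tokens))


# Hypothetical inputs: $4/hour instance, 2 billion tokens/year, $5 per million tokens.
premium = annual_premium(hourly_rate_usd=4.0, tokens_per_year=2e9,
                         usd_per_million_tokens=5.0)
print(f"Estimated self-hosting premium: ${premium:,.0f}/year")
```

The comparison ignores engineering time, storage, and egress, so it is best read as a lower bound on the self-hosting side.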

Inference Optimization Strategies

Improve self-hosted performance with inference engines (vLLM, TensorRT-LLM) and quantized formats (FP8, AWQ, GGUF). Optimize KV caching, consider LoRA adapters for reduced memory, and explore batching. For CPU inference, memory bandwidth (channel count, memory speed, DDR5 or newer) and AVX instruction support are more critical bottlenecks than raw core count.
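Why bandwidth dominates on CPU can be shown with a back-of-the-envelope bound: at batch size 1, generating each token requires reading roughly all model weights from memory once, so decode speed cannot exceed bandwidth divided by model size. The sketch below assumes a dense model, 64-bit (8-byte) memory channels, and theoretical peak bandwidth; the function names and example values are illustrative, and real throughput will be lower.

```python
def peak_memory_bandwidth_gbs(channels: int, mega_transfers_per_s: int,
                              bytes_per_transfer: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (assumes 64-bit channels by default)."""
    return channels * mega_transfers_per_s * 1e6 * bytes_per_transfer / 1e9


def decode_tokens_per_s_upper_bound(model_size_gb: float, bandwidth_gbs: float) -> float:
    """Upper bound on single-stream decode speed for a dense model.

    Assumes every weight is read once per generated token and ignores
    KV cache traffic and compute time.
    """
    return bandwidth_gbs / model_size_gb


# Illustrative comparison: dual-channel desktop vs. 8-channel server memory,
# both serving a hypothetical 40 GB quantized model.
for label, channels, speed in [("2ch DDR5-5600", 2, 5600), ("8ch DDR5-4800", 8, 4800)]:
    bandwidth = peak_memory_bandwidth_gbs(channels, speed)
    bound = decode_tokens_per_s_upper_bound(40.0, bandwidth)
    print(f"{label}: {bandwidth:.1f} GB/s peak, at most {bound:.1f} tokens/s")
```

Under these assumptions, adding memory channels raises the bound while adding cores does not, which matches the bottleneck described above.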

RAG vs. Extended Context Windows

Extending context windows to millions of tokens presents challenges: significant VRAM/cost increase and potential retrieval degradation ("lost in the middle"). Retrieval-Augmented Generation (RAG) remains viable for controlling latency, cost, accuracy, and integrating data not present in the base model's training set.
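The VRAM growth comes largely from the KV cache, which scales linearly with sequence length. A rough estimate, assuming a standard transformer that stores one key and one value vector per layer, per KV head, per token; the model dimensions below are hypothetical and not taken from any model named in this digest.

```python
def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_element: int = 2,
                   batch_size: int = 1) -> int:
    """Approximate KV cache size in bytes.

    The leading factor of 2 covers keys and values; bytes_per_element=2
    corresponds to FP16/BF16 storage.
    """
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * bytes_per_element * batch_size)


# Hypothetical configuration: 32 layers, 8 KV heads, head dimension 128, FP16 cache.
for tokens in (8_192, 131_072, 1_000_000):
    gib = kv_cache_bytes(32, 8, 128, tokens) / 2**30
    print(f"{tokens:>9,} tokens -> {gib:,.1f} GiB of KV cache per sequence")
```

With this configuration the cache costs 128 KiB per token, so a single million-token sequence needs over 100 GiB before counting the weights. Retrieval keeps the prompt short and sidesteps that cost.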

Links:

- https://www.ragie.ai/blog/ragie-on-rag-is-dead-what-the-critics-are-getting-wrong-again

- https://github.com/NirDiamant/RAG_Techniques

- https://www.microsoft.com/en-us/research/blog/lazygraphrag-setting-a-new-standard-for-quality-and-cost/

Model Implementation & Compatibility Challenges

Initial llama.cpp integration for GLM-4-32B showed repetition errors, potentially from conversion issues. Running Qwen2.5-VL via vLLM exhibited problems possibly tied to KV cache quantization (fp8_e5m2) or AWQ quantization. Microsoft's BitNet b1.58 2B GGUF initially failed to load in LM Studio.

Links:

- https://github.com/THUDM/GLM-4

- https://huggingface.co/microsoft/bitnet-b1.58-2B-4T-gguf

- https://github.com/phildougherty/qwen2.5-VL-inference-openai

Overtraining Impact on Fine-Tuning Adaptability

Research indicates that models trained at excessively high token-to-parameter ratios (overtrained) show reduced adaptability during fine-tuning, particularly for dissimilar tasks. This phenomenon, potentially exacerbated by learning rate annealing during pre-training, might explain why newer, heavily trained models appear harder to fine-tune than earlier generations.
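The token-to-parameter ratio is simple to compute for any model with published training figures. The sketch below compares a model against a reference ratio; the default of 20 tokens per parameter is a commonly cited compute-optimal rule of thumb and is an assumption here, not a threshold stated by the research summarized above. The example figures are hypothetical.

```python
def tokens_per_parameter(training_tokens: float, parameters: float) -> float:
    """Ratio of pre-training tokens to model parameters."""
    return training_tokens / parameters


def overtraining_factor(training_tokens: float, parameters: float,
                        reference_ratio: float = 20.0) -> float:
    """How many times the model exceeds the reference ratio (assumed, see lead-in)."""
    return tokens_per_parameter(training_tokens, parameters) / reference_ratio


# Hypothetical model: 2 billion parameters trained on 4 trillion tokens.
ratio = tokens_per_parameter(4e12, 2e9)
factor = overtraining_factor(4e12, 2e9)
print(f"{ratio:,.0f} tokens/parameter, {factor:,.0f}x the reference ratio")
```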

Links: