High-Throughput Inference with Qwen2.5-7B on 2x 3090

Achieve ~4500 prompt tokens/s and ~825 generation tokens/s with a W8A8-quantized Qwen2.5-7B on 2x 3090 GPUs. Uses the Aphrodite engine with tensor parallelism (-tp 2) and max_num_seqs tuned to 32. W8A8 quantization gave a significant speedup over the unquantized model as well as over AWQ and GPTQ quants.
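W8A8 means both the weights and the activations are quantized to 8-bit integers, so the matrix multiplications themselves can run in int8 instead of only the weight storage shrinking. One plausible reason for the speedup over AWQ and GPTQ (not stated in the source) is that those are usually weight-only formats, while W8A8 lets the 3090's int8 tensor cores handle the GEMMs. The pure-Python sketch below shows only the arithmetic, assuming simple symmetric per-tensor scales; the actual checkpoint's scheme and Aphrodite's kernels are not described here and likely use finer-grained scales and fused GPU kernels.

```python
# Minimal W8A8 sketch (assumed symmetric per-tensor scales): quantize both
# operands to int8, multiply in integers, rescale once at the end.

def quantize_int8(matrix):
    """Symmetric per-tensor quantization to int8 values in [-127, 127]."""
    max_abs = max(abs(v) for row in matrix for v in row)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [[max(-127, min(127, round(v / scale))) for v in row] for row in matrix]
    return q, scale


def matmul(a, b):
    """Plain matmul; with int inputs, Python ints act as wide accumulators."""
    cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def w8a8_matmul(activations, weights):
    """Approximate activations @ weights using int8 operands."""
    qa, sa = quantize_int8(activations)  # A8: quantized activations
    qw, sw = quantize_int8(weights)      # W8: quantized weights
    acc = matmul(qa, qw)                 # integer-only inner products
    # Dequantize once: the product of both scales maps ints back to floats.
    return [[v * sa * sw for v in row] for row in acc]


if __name__ == "__main__":
    x = [[0.5, -1.2, 0.3], [2.0, 0.1, -0.7]]     # 2x3 activations
    w = [[0.8, -0.1], [0.05, 0.4], [-0.6, 0.9]]  # 3x2 weights
    print(w8a8_matmul(x, w))
    print(matmul(x, w))
```

Each operand is off by at most half a quantization step, so the result stays within a small bounded error of the float product. Production schemes shrink that error further with per-channel weight scales and per-token activation scales.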

Novel Iterative Search Model: ReZero

ReZero uses GRPO with tool calling and rewards the model for retrying its searches, improving the relevance of retrieved results. It scored 46% versus a 20% baseline, suggesting that repeated search attempts are not necessarily a sign of hallucination. Potential uses include serving as a query generator or an abstraction layer. The paper, model, and code are available.

Links:

- https://arxiv.org/abs/2504.11001

- https://huggingface.co/Menlo/ReZero-v0.1-llama-3.2-3b-it-grpo-250404

- https://github.com/menloresearch/ReZero
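ReZero's exact reward is defined in its paper and repository; the sketch below only illustrates how GRPO can turn a retry-friendly reward into a learning signal. GRPO samples a group of rollouts for the same prompt and normalizes each rollout's reward against that group's mean and standard deviation, so no separate value model is needed. `retry_aware_reward`, its 0.1 bonus, and its cap are hypothetical and not taken from ReZero.

```python
import statistics


def retry_aware_reward(answer_correct, num_searches,
                       bonus_per_retry=0.1, max_rewarded_retries=3):
    """HYPOTHETICAL reward (not ReZero's): 1.0 for a correct answer plus a
    capped bonus per extra search attempt, granted only when the answer is
    correct so the bonus cannot be farmed by searching without answering."""
    if not answer_correct:
        return 0.0
    retries = max(0, num_searches - 1)
    return 1.0 + bonus_per_retry * min(retries, max_rewarded_retries)


def group_relative_advantages(rewards, eps=1e-6):
    """GRPO-style advantage: each reward normalized against its own group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    # One prompt, four rollouts: (answer judged correct?, number of searches).
    rollouts = [(True, 3), (True, 1), (False, 2), (False, 1)]
    rewards = [retry_aware_reward(c, n) for c, n in rollouts]
    print(rewards)
    print(group_relative_advantages(rewards))
```

Under this toy reward, the correct rollout that retried gets the largest positive advantage, the correct single-search rollout a smaller one, and the incorrect rollouts negative advantages.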

SFT Can Hinder RL Reasoning in LVLMs

According to recent research, applying SFT early can induce "pseudo reasoning paths" imitated from expert models, which hinder genuine progress during subsequent RL in Large Vision-Language Models (LVLMs). SFT teaches the output format, whereas GRPO-based RL with mixed rewards fosters more adaptive reasoning.
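The source does not say which rewards were mixed. A common pattern in GRPO-style reasoning work, assumed here, adds a format reward (the structure SFT would also teach) to an accuracy reward (which only pays off for a correct final answer). The `<think>`/`<answer>` tags, the exact-match check, and the weights below are hypothetical.

```python
import re

# HYPOTHETICAL output format: reasoning in <think>, final answer in <answer>.
FORMAT_RE = re.compile(r"^<think>.+?</think>\s*<answer>(.+?)</answer>$", re.DOTALL)


def format_reward(completion):
    """1.0 if the completion follows the expected structure, else 0.0."""
    return 1.0 if FORMAT_RE.match(completion.strip()) else 0.0


def accuracy_reward(completion, ground_truth):
    """1.0 if the extracted answer matches the reference (case-insensitive)."""
    m = FORMAT_RE.match(completion.strip())
    if not m:
        return 0.0
    return 1.0 if m.group(1).strip().lower() == ground_truth.strip().lower() else 0.0


def mixed_reward(completion, ground_truth, w_format=0.5, w_accuracy=1.0):
    """Weighted sum: format alone earns partial credit, correctness earns more."""
    return (w_format * format_reward(completion)
            + w_accuracy * accuracy_reward(completion, ground_truth))


if __name__ == "__main__":
    print(mixed_reward("<think>Two cats plus one cat.</think> <answer>3</answer>", "3"))
```

Weighting accuracy above format means a rollout that merely imitates the expected structure scores lower than one that actually reaches the right answer.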

Links:

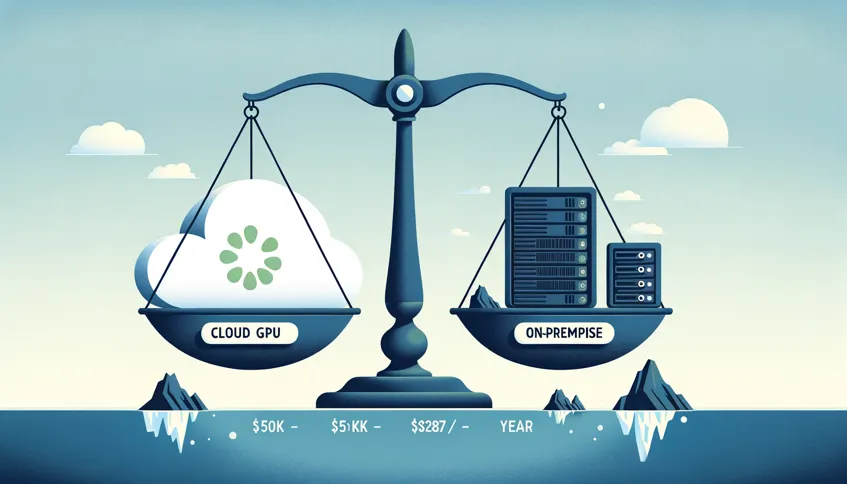

Building High-VRAM Rigs with MI50 GPUs

Build a rig with 160GB of VRAM for under $1200 using AMD MI50 GPUs (~$90 each) in Octominer cases. Requires ROCm 6.3.0 for MI50 support. llama.cpp benchmarks show ~5 t/s on Llama 3.3 70B Q8 split across 5x MI50 via RPC, limited by prompt processing and RPC overhead.
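The budget and memory figures can be sanity-checked with a little arithmetic. This sketch assumes 16GB MI50 cards (the MI50 also exists in a 32GB variant, and the source doesn't say which was used), llama.cpp's Q8_0 format at 8.5 bits per weight (32 int8 values plus one fp16 scale per block), and roughly 70.6B parameters for Llama 3.3 70B.

```python
# Assumptions (not stated in the source): 16 GB per MI50, Q8_0 at 8.5
# bits/weight, ~70.6e9 parameters. Treating a 16 GB card as 16e9 bytes is
# conservative, since the card actually has 16 GiB.
GPU_VRAM_GB = 16
GPU_PRICE_USD = 90
Q8_0_BITS_PER_WEIGHT = 8.5  # 32 int8 weights + one fp16 scale per block
LLAMA_33_70B_PARAMS = 70.6e9


def cards_needed(target_vram_gb, per_card_gb=GPU_VRAM_GB):
    """Smallest number of cards whose combined VRAM reaches the target."""
    return -(-target_vram_gb // per_card_gb)  # ceiling division on ints


def weights_size_gb(num_params, bits_per_weight):
    """Approximate size of the quantized weights alone (no KV cache)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    cards = cards_needed(160)
    print(f"{cards} cards, ${cards * GPU_PRICE_USD} in GPUs")
    model_gb = weights_size_gb(LLAMA_33_70B_PARAMS, Q8_0_BITS_PER_WEIGHT)
    pool_gb = 5 * GPU_VRAM_GB
    print(f"Q8_0 weights ~{model_gb:.1f} GB vs {pool_gb} GB on 5 cards")
```

Under these assumptions, 10 cards reach 160GB for about $900, leaving roughly $300 of the $1200 budget for cases and other parts, and the ~75GB of Q8 weights fit in the 80GB of 5 cards with only a few GB left for KV cache and compute buffers.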

Local Agent Orchestration with LocalAGI

LocalAGI lets you build and run AI agent workflows locally, using any OpenAI-compatible API (such as LocalAI) for LLM access. Features a WebUI, tool use, connectors (Telegram, Discord), persistent memory via LocalRecall, and a Go backend. MIT licensed.
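LocalAGI's own configuration isn't described here, but "OpenAI-compatible" in practice means the backend accepts the standard chat-completions request shape. The sketch below builds and sends such a request with only the Python standard library; the base URL (LocalAI listening on localhost:8080) and the model name are assumptions, not values from the source.

```python
import json
import urllib.request

# ASSUMED endpoint and HYPOTHETICAL model name; adjust for your server.
BASE_URL = "http://localhost:8080/v1"
MODEL = "my-local-model"


def build_chat_request(prompt, model=MODEL, base_url=BASE_URL, api_key="not-needed"):
    """Build a POST to the OpenAI-style /chat/completions endpoint."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Many local servers ignore the key, but the header is standard.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def chat(prompt, **kwargs):
    """Send the request and return the first choice's message text."""
    req = build_chat_request(prompt, **kwargs)
    with urllib.request.urlopen(req, timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a server running, `chat("Summarize this thread")` returns the model's reply; swapping `base_url` for another OpenAI-compatible server should be the only change needed, which is the portability that "any OpenAI-compatible API" implies.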
